A post by Jake Spiteri, Compass PhD student.

Recent advancements in kernel methods have introduced a framework for nonparametric statistics by embedding and manipulating probability distributions in Hilbert spaces. In this blog post we will look at how to embed marginal and conditional distributions, and how to perform probability operations such as the sum, product, and Bayes’ rule with embeddings.

Embedding marginal distributions

Throughout this blog post we will make use of reproducing kernel Hilbert spaces (RKHS). A reproducing kernel Hilbert space is simply a Hilbert space with some additional structure, and a Hilbert space is just a topological vector space equipped with an inner product, which is also complete.

We will frequently refer to a random variable which has domain

endowed with the

-algebra

.

Definition. A reproducing kernel Hilbert space on

with associated positive semi-definite kernel function

is a Hilbert space of functions

. The inner product

satisfies the reproducing property:

. We also have

.

It may be helpful to think of as

; all functions in our space may be written as a sum of feature maps

. This can help us visualise what functions belong to our RKHS. We should also note that kernel functions are not limited to

— kernel functions have been defined for inputs such as strings, graphs, images, etc.

Definition. Let the space of all probability measures on

. We can embed any probability distribution

into the RKHS

with associated kernel

via the kernel mean embedding:

The embedding exists and belongs to the RKHS

if

. The kernel mean embedding is representer of expectation in the RKHS: it satisfies the reproducing property

. I.e. can compute expectations of functions

with respect to

by taking an inner product between

and

.

A useful property of the kernel mean embedding is that it captures all characteristics of a probability distribution, for a good choice of kernel. Provided that the feature mapping induced by the kernel is injective, the embedding is unique. A kernel which corresponds to an injective feature mapping is said to be characteristic. That is, for , if

then we must have

for a characteristic kernel. Choosing an appropriate kernel function for a given problem is not straightfoward, and it is common to use a ‘default’ kernel choice such as the Gaussian or Laplace kernel, as they are characteristic. We should note here that whilst the mapping from distribution to kernel mean embedding is unique, reconstructing the original density function is non-trivial.

Empirical estimator. In practice it is unlikely that we would have access to the distribution , and so rather than compute the kernel mean embedding

, we can only compute its empirical estimate. Given an i.i.d. sample

from

, we can compute the unbiased estimate

. This empirical estimator has

-consistency.

Above we have introduced a quantity which makes use of the mean feature mapping in the RKHS. Similarly, we may also make use of the covariance of feature mappings in the RKHS.

Definition. Let be a random variable taking values on domain

, and let

and

be RKHSs with kernel functions

and

on domains

and

respectively. Let

and

be the feature maps corresponding to kernels

and

respectively. Then we define the (uncentered) cross-covariance operator

as

where denotes the tensor product, and

denotes the joint probability distribution of

The cross-covariance operator exists if and

. It is useful to note that

can be identified as the kernel mean embedding of the joint distribution in the tensor product space

. We emphasise that the cross-covariance operator is an operator mapping from one RKHS to another. We see that when acting on an element

we have

.

Empirical estimator. Given an i.i.d. sample from the joint probability distribution

we have the following empirical estimator of the cross-covariance

where and

are row vectors of functions in

and

respectively.

Embedding conditional distributions

Using the cross-covariance operator, we can now define the conditional mean embedding. We want to extend the notion of embedding a marginal distribution to that of embedding a conditional distribution

and

for some

. Embeddings which capture the dependence between variables will allow us to develop more complex algorithms, and will allow us to produce kernel versions of elementary probability operations such as the sum rule and product rule.

We start by specifying two conditions which we want the conditional mean embedding to satisfy based on our definiton of the kernel mean embedding. We will use and

to denote the embeddings of the conditional distributions

and

respectively.

These conditions are

.

Note that for a given the embedding of

,

, is an element in

, whereas the embedding of

,

, is an operator which traces out a family of elements in the RKHS

.

It is shown in (Song, 2009) that the following definition satisfies the above properties.

Definition. Let , and

. Then we can define the conditional mean embeddings of the conditional distributions

and

, for

, as

under the assumption that . It is easy to produce examples in which this does not hold, and so we often replace the above inverse with a Tikhonov-regularised inverse, and consider the embeddings to be approximations of the true embeddings.

Empirical estimator. Given a set of i.i.d. samples from the joint distribution

, the conditional mean embedding can be estimated using

,

where for

the Gram matrix associated with

, and

, and

a regularisation parameter.

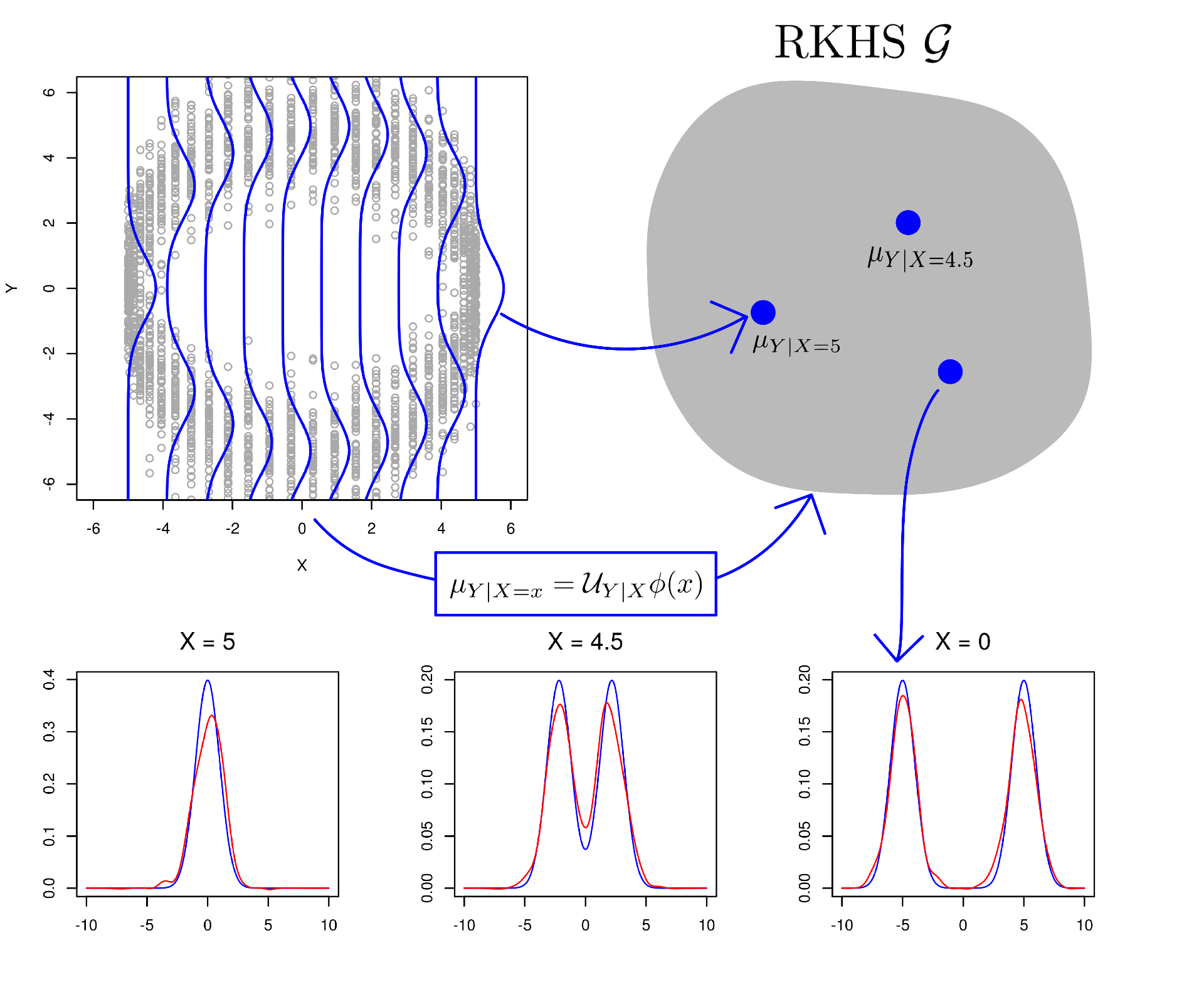

An example. Here we look at a simple example of the conditional mean embedding. We generate data in a method inspired by Schuster et al. (2020). We start by drawing a circle on the -plane, and specifying 50 equidistant points on the circle. Each of these points is the mean of a Gaussian distribution over

, and we draw 50 samples from each distribution with equal probability. The conditional distribution

in this scenario is a mixture of Gaussians. The top left plot of Figure 1 shows the data sampled from these Gaussians in grey, with conditional distributions overlaid in blue. The conditional mean embedding embeds each conditional distribution in the RKHS

illustrated in the top right plot, via

. In the second row of Figure 1 we see three examples of the reconstructed conditional densities in red. These reconstructed densities have been obtained via the nonparametric estimator proposed in Kanagawa et al. (2014).

Figure 1. A visualisation of the conditional mean embedding.

Operations: the Sum, Product, and Bayes’ rule

Using the conditional mean embedding and the cross-covariance operator, we can produce versions of the sum, product, and Bayes’ rule which allow us to manipulate distributions embedded in an RKHS.

Recall the sum rule and product rule: , and

.

Kernel Sum rule. The sum rule allows us to compute the marginal density of a variable given the joint distribution over variables

and

, by marginalising out

.

Using the law of total expectation, we have . Thus, we have

.

Kernel Product rule. To construct a kernel product rule, we consider the tensor product feature map . We can factorise the embedding

in two ways using the law of total expectation

,

.

Let , and

. Then we can rewrite the above as

.

This looks similar to the sum rule, but we see that there is an additional copy of the variables and

passed as input, which is not marginalised out as is done in the sum rule.

Kernel Bayes rule. The kernel Bayes’ rule (KBR) is a nonparametric method which allows for Bayesian inference in the absence of a parametric model or likelihood. In KBR we embed the prior and likelihood in an RKHS via the kernel mean embedding and cross-covariance operator respectively, and use the sum and product rules to manipulate the embeddings in the RKHS.

The presentation of KBR shown here is that given in Muandet et al. (2017), which provides a concise summary of the original work (Fukumizu et al., 2013). Our aim is to compute the embedding of the posterior given a prior distribution

. We obtain the embedding of the posterior distribution as

, where we use a superscript

to denote dependence on the prior

. More precisely, we have

,

where the cross-covariance operators depend on the prior, and are given by

.

These results follow from the product and sum rule respectively, where we have replaced the input feature map with the tensor product feature map

. The embeddings

and

are simply the embedded prior distribution corresponding to the feature maps

and

.

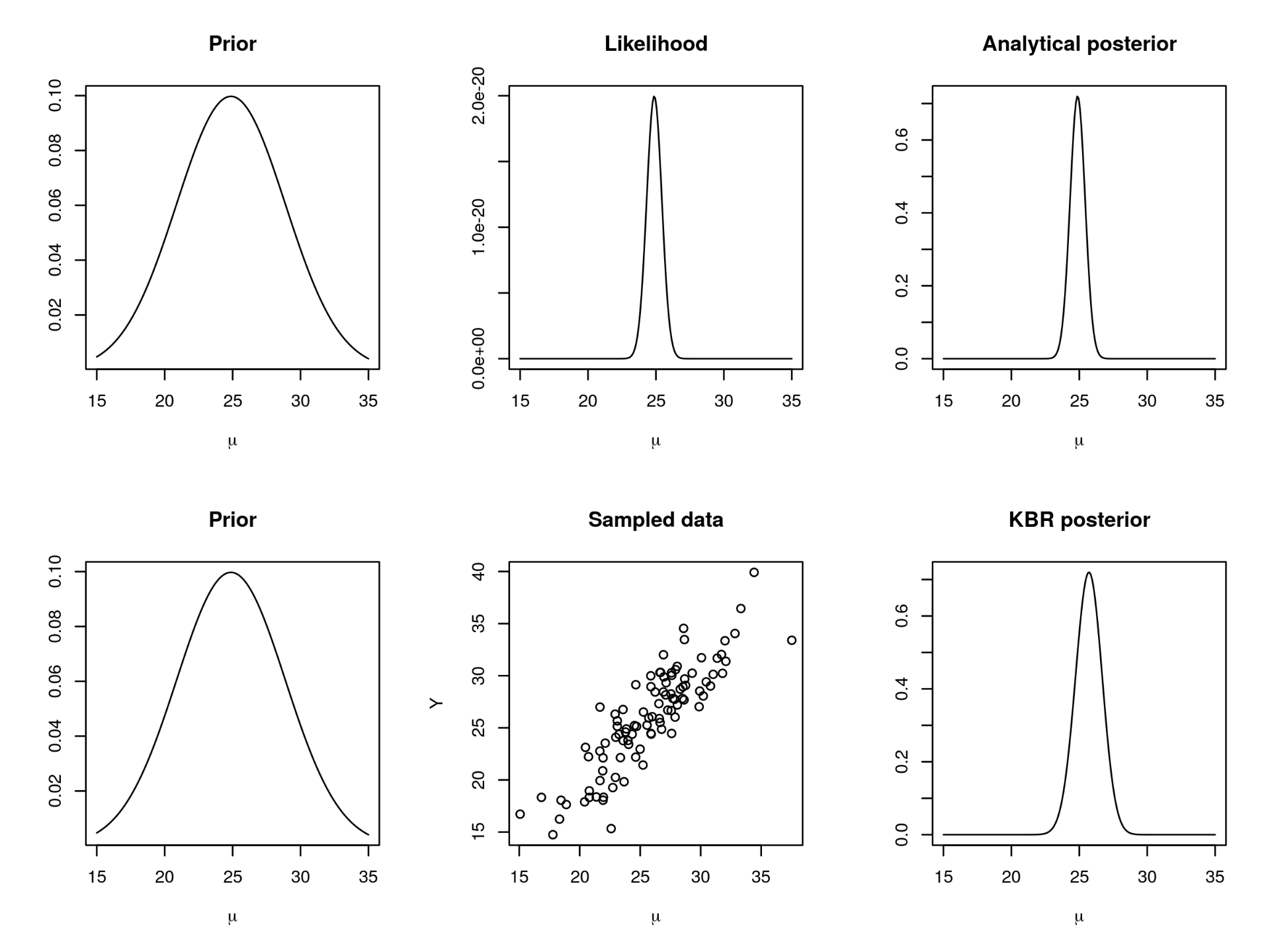

A KBR example. Here we look at a simple example of Bayesian inference without a likelihood, similar to that given in Fukumizu et al. (2013). In this toy example we assume that we cannot write down the likelihood function, but we can sample from it. We generate data from a Normal distribution with mean , and we assume the variance

is known. We place a Normal prior on

with mean equal to the mean of the observed data, and standard deviation 4. Figure 2 below shows two ways of approaching posterior inference: in the first row we use the fact that the prior and likelihood are conjugate, and we derive the analytical posterior distribution; in the second row we embed the prior distribution and the joint distribution in the RKHS and use KBR to obtain an embedding of the posterior.

Figure 2. Top row: Posterior inference when the prior and likelihood are conjugate. Bottom row: Posterior inference via KBR when the likelihood is intractable.

Figure 2. Top row: Posterior inference when the prior and likelihood are conjugate. Bottom row: Posterior inference via KBR when the likelihood is intractable.

References

Fukumizu, K., L. Song, and A. Gretton (2013). “Kernel Bayes’ rule: Bayesian inference with positive definite kernels”. In: The Journal of Machine Learning Research 14.1, pp. 3753–3783.

Kanagawa, M. and K. Fukumizu (2014). “Recovering distributions from Gaussian RKHS embeddings”. In: Artificial Intelligence and Statistics. PMLR, pp. 457–465.

Muandet, K., K. Fukumizu, B. Sriperumbudur, and B. Schölkopf (2017). “Kernel Mean Embedding of Distributions: A Review and Beyond”. In: Foundations and Trends in Machine Learning.

Schuster, I., M. Mollenhauer, S. Klus, and K. Muandet (2020). “Kernel conditional density operators”. In: International Conference on Artificial Intelligence and Statistics. PMLR, pp. 993–1004.

Song, L., J. Huang, A. Smola, and K. Fukumizu (2009). “Hilbert space embeddings of conditional distributions with applications to dynamical systems”. In: Proceedings of the 26th Annual International Conference on Machine

Learning, pp. 961–968.