A post by Ettore Fincato, PhD student on the Compass programme.

This post provides an introduction to Gradient Methods in Stochastic Optimisation. This class of algorithms is the foundation of my current research work with Prof. Christophe Andrieu and Dr. Mathieu Gerber, and finds applications in a great variety of topics, such as regression estimation, support vector machines, convolutional neural networks.

We can see below a simulation by Emilien Dupont (https://emiliendupont.github.io/) which represents two trajectories of an optimisation process of a time-varying function. This well describes the main idea behind the algorithms we will be looking at, that is, using the (stochastic) gradient of a (random) function to iteratively reach the optimum.

Stochastic Optimisation

Stochastic optimisation was introduced by [1], and its aim is to find a scheme for solving equations of the form given “noisy” measurements of

[2].

In the simplest deterministic framework, one can fully determine the analytical form of , knows that it is differentiable and admits an unique minimum – hence the problem

is well defined and solved by .

On the other hand, one may not be able to fully determine because his experiment is corrupted by a random noise. In such cases, it is common to identify this noise with a random variable, say

, consider an unbiased estimator

s.t.

and to rewrite the problem as

Example

Consider a repeated experiment which gives pairs of input-output iid observations ,

with

. Assume that

depends on

in the following way:

where is an error term,

is an intercept and

. This is for example a classical set-up of a Linear Regression procedure, where one generally assumes

. More generally,

could be a polynomial, a spline, a neural network.

In order to find the best fit, one generally assumes that is identified by the choice of

, that is

if

, and investigates the Mean Squared Error problem

where

.

In general, we do not know the true distribution of and we only have access to this sample, hence we can only evaluate

up to noise. This is a classical stochastic optimisation problem, which in several cases can be tackled by a recursive algorithm such as Stochastic Gradient Descent.

Stochastic Gradient Descent and Dynamics

The Stochastic Gradient Descent algorithm is the core of stochastic gradient methods. It is based on the simple idea of iteratively following the direction suggested by the gradient at the -th iteration. It is interesting to look at it from a dynamical systems point of view, that is, as a noisy approximation of a certain ordinary differential equation (ODE).

Noisy approximation of ODEs

Suppose we have an ODE

describing the dynamics of a system. A well known scheme to numerically approximate the solution of such equation is the Euler Scheme

where is the so-called time-step. If one only has noisy evaluations of

, he can replace it by an unbiased estimator

, where

is some random variable.

Hence, in practice, the algorithm generates, independently for each , a random variable

, and computes

.

By unbiasedness of , we can write

where is a sequence of zero-mean random variables uncorrelated with the past (a martingale difference sequence). Hence the recursion above is equivalent to

.

This last formulation is the most used in the literature.

A further extension of the scheme is recovered by introducing non-constant time-steps, say with

, writing

.

The above is a stochastic approximation scheme which differs from the Euler’s scheme in the fact that it involves a noise and time-varying steps

[2].

Ensuring a good exploration of the space

These time-decaying steps must satisfy some stability criteria.

- The algorithm needs to explore the space for long enough: hence it is common to assume

.

- Moreover, one needs the error component

to go to zero quickly enough: hence one generally assumes

, which can be shown to be equivalent to

when

are iid with zero mean and finite variance [2].

Stochastic Gradient Descent

Now come back to the example of finding the minimum of a function for which we only have noisy measurements. Let

In this case the the above scheme becomes

(1)

where we have used for

unbiased estimator of

.

It is called Stochastic Gradient Descent (SGD), and represents a noisy discretisation of

which is a well known ODE and is known to converge to the optimum s.t.

when

is convex, or regular enough [2]. Intuitively, the ODE says that the underlying dynamics of

should follow the gradient of

.

Stochastic gradient descent can be shown to converge if the target function is smooth enough. See e.g. [3][4].

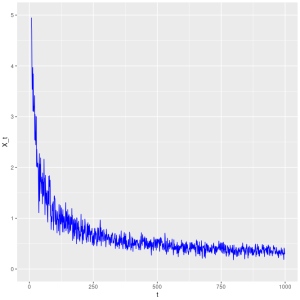

SGD and Stochastic Differential Equations

One could also see the SGD recursion as a time-discretisation of a stochastic differential equation (SDE). Under regular behaviour of time-steps, and if we assume iid

, SGD approximates the below Itô diffusion SDE

where is a standard Brownian Motion. If familiar with diffusion processes, one can see SGD as a Brownian motion with gradient drift and diffusion part

. See figure below for an example of what a simple SGD motion looks like.

Variations and extensions

We briefly present two important variations of SGD method which are often seen in the literature.

Projected Stochastic Gradient Descent

Suppose now we are solving a constrained optimisation problem, that is, let be a subspace of our original space, say

, and let

be the function we want to optimise. The constrained problem is therefore

.

To solve this problem in a “SGD style”, one can project every SGD step on . With an euclidean projection, this is equivalent to the following recursion

where is a so-called sub-gradient, and the easiest example for its choice is an unbiased estimator

of

, as previous sections. More generally, it is a function that should resemble certain fundamental algebraic properties of a gradient.

By easy algebra, we see that the above is equivalent to

Hence we see that an immediate extension of this would be to replace the euclidean distance with some more general definition of divergence. In the literature, this is done by introducing a so-called Bregman divergence

, defining the class Stochastic Mirror Descent algorithms.

Stochastic Mirror Descent

Miming an euclidean distance, for which it holds that

the Bregman divergence is defined as

where is a so-called Legendre function. Examples for

are

- Energy

in which case we recover an euclidean projection

- Boltzmann-Shannon Entropy

- Fermi-Dirac Entropy

Using a Bregman divergence, we therefore get a more general recursion

which is called Stochastic Mirror Descent (SMD) recursion. Hence, a SMD move is a tradeoff between following the slope of the (sub) gradient, as in a SGD algorithm, and moving according to the Bregman divergence. This generalisation allows to tackle in a “SGD style” a great variety of problems, such as non-convex problems and quadratic inverse problems [5].

References

[1] Robbins, H. and Monro, S. (1951). A Stochastic Approximation Method. The Annals of

Mathematical Statistics, 22(3):400 – 407.

[2] Borkar, V. (2008). Stochastic Approximation: A Dynamical Systems Viewpoint. Cam-

bridge University Press.

[3] Turinici, G. (2021). The convergence of the stochastic gradient descent (sgd) : a self-

contained proof. Technical report.

[4] Patel, V., B. Tian, and S. Zhang (2021). Global convergence and stability of stochastic

gradient descent. CoRR abs/2110.01663.

[5] Bolte, J., Sabach, S., Teboulle, M., and Vaisbourd, Y. (2018). First order methods bey-

ond convexity and lipschitz gradient continuity with applications to quadratic inverse

problems. SIAM Journal on Optimization, 28(3):2131–2151.