A post by Alessio Zakaria, PhD student on the Compass programme.

Introduction

Probability theory is a branch of mathematics centred on the abstract manipulation and quantification of uncertainty and variability. It forms a foundation of the theory and practice of statistics, enabling us to turn the complex nature of observable phenomena into meaningful information. Because statistics relies on it so heavily, the debate over the true (or more correct) underlying nature of probability has profound effects on how statisticians do their work. The two opposing sides of the current debate are the Frequentists and the Bayesians. Frequentists believe that probability is intrinsically linked to the numeric regularity with which events occur, i.e. their frequency. Bayesians, however, believe that probability is an expression of someone's degree of belief or confidence in a certain claim. In everyday parlance we use both concepts interchangeably: I estimate that one in five people have Covid; I was 50% confident that the football was coming home. Note that the latter is not a repeatable event per se: we cannot roll back time to see what a repeated sequence would produce.

The two positions outlined above are rather extreme ends of the debate, with myriad positions lying between and beyond them. In statistical practice, the two viewpoints lead to different mathematical techniques. Forgoing technical detail, Bayesian statisticians think that we should incorporate prior knowledge or belief into our process of distilling information from the world, potentially sacrificing our ability to make frequency-based claims about our phenomena of interest. Frequentist statisticians, however, reject subjective prior knowledge and demand that all claims be tied intrinsically to frequency, losing simple interpretations of our uncertainty in doing so. Making sense of this debate is essential to the applied statistician's daily work. This is no easy feat, as the literature is vast and centuries old, leaving the time-pressed statistician lacking confidence in any conclusion they may reach. As will be discussed, this debate is a rerun of an older one over whether probability is objective (a property of external objects, independent of human beings) or subjective (existing only within people's minds and relating to our knowledge about the world).
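The post deliberately forgoes technical detail, but a small sketch may make the contrast concrete. Suppose, hypothetically, that 20 of 100 people tested are infected, echoing the "one in five" example above. A frequentist summary reports the observed proportion with a confidence interval, whose nominal 95% level describes the long-run behaviour of the procedure over repeated samples. A Bayesian summary combines the same data with a prior, here an arbitrarily chosen Beta(5, 45) prior centred on 0.1, to give a posterior degree of belief. The data, the prior, the use of a normal-approximation (Wald) interval and the function names are all illustrative assumptions, not techniques taken from the works discussed.

```python
import math


def frequentist_estimate(k: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Maximum-likelihood estimate of a proportion with a normal-approximation (Wald) interval.

    The nominal ~95% level is a claim about the procedure: over many repeated samples,
    intervals built this way cover the true proportion roughly 95% of the time.
    """
    p_hat = k / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat, p_hat - z * se, p_hat + z * se


def bayesian_posterior_mean(k: int, n: int, a: float, b: float) -> float:
    """Posterior mean of a proportion under a Beta(a, b) prior and a binomial likelihood.

    By conjugacy the posterior is Beta(a + k, b + n - k); its mean expresses a degree
    of belief that blends the prior with the observed data.
    """
    return (a + k) / (a + b + n)


if __name__ == "__main__":
    k, n = 20, 100  # hypothetical data: 20 positives out of 100 people tested
    p_hat, lo, hi = frequentist_estimate(k, n)
    print(f"Frequentist: estimate {p_hat:.3f}, 95% CI ({lo:.3f}, {hi:.3f})")
    post = bayesian_posterior_mean(k, n, a=5, b=45)  # assumed prior with mean 0.1
    print(f"Bayesian:    posterior mean {post:.3f}")
```

With these inputs the frequentist estimate is 0.2 with an interval of roughly (0.12, 0.28), while the posterior mean is 25/150, about 0.167, pulled towards the prior belief. The two numbers answer different questions rather than competing estimates of one quantity.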

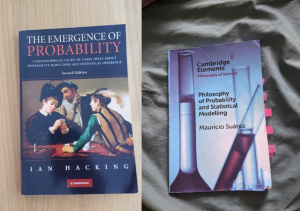

In this blogpost I will briefly explore two contemporary approaches to addressing the problem outlined above. The first is Ian Hacking’s 1975 work The Emergence of Probability; the second is the more recent long-form essay by Mauricio Suarez, The Philosophy of Probability and Statistical Modelling, published in 2021. Both share a core attitude of pluralism about probability: there is no one thing that is probability, nor is there a single unified lens through which it can be interpreted. I will summarise their work and arguments with a view to what the practising applied statistician can take from them.

The Emergence of Probability by Ian Hacking

The Emergence of Probability examines the history of probability theory in order to investigate the preconditions and conceptual space that led to its development. In doing so, Hacking indirectly demonstrates that the concepts which mutated into, and made possible, probability were themselves dual, in the sense that they contained both subjective and objective components, and that the earliest practitioners worked within this dual space.

In The Emergence of Probability, Hacking traces the birth of a formalised mathematics of probability in Europe to a single decade in the 17th century. For Hacking this is remarkably late: gambling and dice had been around since prehistoric times, and a skilled probability theorist would have been able to win big. Much of the early part of the book is devoted to explaining this disparity. The first chapter sets up several hypotheses for the late emergence, ranging from high levels of piety to a lack of economic need, but finds each of them unsatisfactory. Hacking spends the next few chapters outlining his own explanation, in which the duality described in the introduction is crucial. He begins by noting that the duality is present from the inception of probability theory, with the mathematician and philosopher Blaise Pascal as a prototypical example. Pascal corresponded with his fellow mathematician Fermat on problems in games of chance, but also took a subjective approach in his famous wager on the existence of God. Within the decade Hacking identifies as the decade of emergence, he finds many different theoreticians working on subjectivist and objectivist applications alike, with no clear throughline.

The missing ingredient, Hacking posits, was a specific concept of evidence, without which probability could not develop. The type of evidence that was missing is what we would today call inductive evidence or, as Hacking might put it, evidence of things pointing beyond themselves. Consider the evidence needed to establish that all ravens are black: we would observe a number of ravens and try to draw conclusions about all ravens. In denying that this concept of evidence existed, Hacking was not suggesting that people did not reason inductively, but rather that such reasoning was not considered to establish claims in the way it might today, or in the way other forms of evidence did. In place of this now-familiar type of evidence stood two distinct concepts. The first was pure Demonstration: the derivation of a conclusion from first principles, typified by mathematics and physics. The second was Opinion, the kind of evidence that low scientists such as astrologers or physicians traded in. In the period in question it was opinion that could be probable, though in a sense profoundly different from the one we are familiar with.

An opinion being probable meant that it was approvable by the wise or learned. Hacking points to phrases such as “the probable doctor” or “the house was very probable” to illustrate how alien the old usage is. The phrases express the fact that the doctor and the house were well thought of by people in the know. The slow shift of probability from this older sense to its modern one reflects the gradual acceptance of inductive evidence as a legitimate type of evidence.

Since low scientists could not Demonstrate, the pre-reflective, pseudo-inductive evidence that Hacking shows they used in place of induction, and that eventually evolved into inductive evidence, was the Sign. The Sign is a strange concept that is hard to pin down, but at its essence a Sign is simply a pattern or link that had emerged in some form or another. One goal of early low scientists was to distinguish natural Signs (Signs relating to the external world) from conventional Signs (Signs relating to human constructs). An example of a natural Sign might be the number of points on a deer’s antlers, whereas a conventional Sign might be something like the names of metals or stars. Conventional Signs were given far more weight than we would now give them, and had a power that now confuses us. Some physicians of the time believed that syphilis could be treated with mercury because syphilis was /Signed/ by the marketplace (where it was caught), and Mercury was the god of the marketplace and shared his name with the metal.

As Signs were opinion, the most they could hope to be was probable, i.e. attested to by some authority. For natural Signs, however, there was no clear candidate for such an authority. Hacking shows how, over the surrounding centuries, people developed the idea that natural Signs were attested to by the Authority of Nature itself, which could be understood as God or as The Way Things Really Are. To establish that a natural Sign was probable, its trustworthiness had to be established, and this was done by establishing the stability and frequency of the Signs in question. It is this probable natural Sign that transformed into our modern-day inductive evidence and its associated probability theory. The objective/subjective duality in this preconcept is clear. Signs are purveyors of opinion: they are concerned with the subjective approval of claims or judgements. Yet to establish the trustworthiness (probability in the old sense) of natural Signs, in the absence of any obvious authority to depend on, one looked to their relative frequency and stability, which are objective notions. Thus even the preconceptual space in which probability lived was dual.

Whilst the above presents a very conceptual explanation of a preconcept of probability, Hacking supports his analysis with the words of philosophers, mathematicians and scientists from the centuries leading up to and including the decade of emergence. These works speak of conventional and natural signs, and debate how to decide between two claims attested to by different authorities, i.e. claims that were probable in the old sense. Such discussion appears in the works of writers like Hobbes and even in The Port Royal Logic, to which Pascal contributed; the Logic is considered a seminal text in the development of probability, directly linking probability theory to philosophical issues around probable natural signs. Writers like Galileo also discussed notions of signs and the authority of nature.

In this text and in his other work on probability and statistics, Hacking argues, partly from the above historical analysis and partly on other grounds, that our theories of probability should embrace both its objective and its subjective components. Never one to mince words, he jokingly refers to pure Frequentists and pure Bayesians as “extremists”.

What we can take from The Emergence of Probability is that one of the concepts that preceded probability theory was itself intrinsically dual, and that the earliest practitioners were aware of and worked within this dual space. To my mind, Hacking effectively demonstrates that any interpretation or explanation of probability /must/ account for the duality that has been present since its conception, which his pluralism certainly does.

The Philosophy of Probability and Statistical Modelling by Mauricio Suarez

In The Philosophy of Probability and Statistical Modelling, Mauricio Suarez extends Hacking’s pluralism and historical analysis. Suarez analyses modern interpretations of probability theory and argues that “…each fails to completely describe probability precisely because it doesn’t take into account the duality”. He goes beyond Hacking’s pluralism by advocating a pluralism within objective probability itself, and connects this pluralism to the practice of scientific and statistical modelling.

In his account of historical interpretations, Suarez visits modern subjectivist notions of probability, including the personalism developed by Frank Ramsey and the logical interpretation championed by John Maynard Keynes. The first regards probability as the degree of belief we have in a claim or in an event occurring, which reflects modern Bayesian viewpoints. The logical interpretation instead takes probability to be the degree to which a proposition is entailed by some background knowledge. Suarez raises objections to both interpretations, a common one being that they can only be practically understood and used by incorporating objective components (an objective entailment given all relevant background knowledge, or an underlying chance that the degree of belief approximates), which undermines their subjectivist underpinnings.

Suarez then moves on to the concepts that occupy much of the rest of the book (i.e. objective probability) by reviewing the frequentist and propensity interpretations. Objective probability takes centre stage because it is the primary concern of statistical practice. The frequentist interpretation was outlined in the introduction. It has subtle sub-interpretations that focus on real versus hypothetical frequencies, but both hold that the probability of an event is tied to the ratios of outcomes in a real or hypothetical series. The propensity interpretation was first advocated by the famous philosopher of science Karl Popper to address the seemingly fundamental role probability plays in quantum mechanics. It takes probability to be an inherent property of “chancy” objects. That is, probability is a primitive, irreducible disposition of objects: there is /real/ randomness in the world. Both interpretations face objections, including the fact that finite frequencies can /and do/ deviate from what we assign as the underlying probability, and both suffer equally from subjectivity in choosing reference classes and in assigning probabilities to hypothetical or chancy objects.
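The objection that finite frequencies can and do deviate from the underlying probability can be seen in a small simulation. The sketch below assumes, purely for illustration, a fair coin modelled as independent trials with success probability 0.5; the function name and seed are hypothetical choices. For any odd number of tosses the observed relative frequency cannot equal 0.5 exactly, since the number of heads is a whole number, and it only tends towards 0.5 as the series grows, while any actual series is finite.

```python
import random


def relative_frequency(p: float, n: int, seed: int = 0) -> float:
    """Simulate n independent trials with success probability p; return the observed success frequency."""
    rng = random.Random(seed)  # seeded so that a run is reproducible
    successes = sum(1 for _ in range(n) if rng.random() < p)
    return successes / n


if __name__ == "__main__":
    p = 0.5  # assumed underlying probability (an idealised fair coin)
    for n in (1, 11, 101, 1_001, 100_001):
        freq = relative_frequency(p, n, seed=42)
        # With odd n the number of successes is an integer, so freq can never be exactly 0.5.
        print(f"n={n:>7}  frequency={freq:.4f}  deviation={freq - p:+.4f}")
```

The printed frequencies wander around 0.5 and typically settle closer to it for larger n; which of these numbers, if any, counts as "the" probability of the coin is precisely what the competing interpretations dispute.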

I have only briefly recounted the objections to each interpretation raised by Suarez in the text and references he cites, but it is clear that there is no outright winner in the modern arrangement of interpretations and each suffers from significant flaws.

Suarez takes it as given that probability has both subjective and objective aspects: he finds Hacking’s work persuasive, and the above-mentioned failure of modern philosophies to reconcile the two damning. He then attempts to bridge the gap between the various objective interpretations, arguing that both frequentist and propensity interpretations provide some degree of explanation of probabilistic phenomena. He posits that, in explaining objective variation, we do and will continue to appeal both to the frequency properties of probabilities and to the fundamental propensities of systems and things. Suarez introduces his tiered tripartite conception, which explains observed frequencies via the hypothetical frequencies of chancy systems, explains those in turn by single-case probabilities (a middle layer we have not discussed), and finally explains those single-case probabilities by primitive dispositions, i.e. propensities. In this sense both the propensity and frequentist interpretations have a genuine role in explanation, and each is valuable and real in its own right.

Suarez is explicit that philosophy should be constrained and informed by how chance is used in statistical methodology, and he sees his tripartite conception as reflecting different parts of statistical practice. He spends the latter half of the book showing how the concepts within his tripartite conception, which he calls the complex nexus of chance, show up in statistical modelling. An example he lingers on is a now infamous set of epidemiological models. He explicitly discusses how propensities, single-case probabilities and frequency notions enter epidemiological models through, respectively, the dispositional properties of organisms, the transmissibility of the pathogen in a given environment, and the frequency ratios of susceptible and infected people. In this section Suarez also discusses other statistical models and the philosophical concepts that underpin them.
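To give a feel for how such notions can enter an epidemiological model, here is a hedged sketch of a Reed-Frost chain-binomial epidemic, a classic textbook model chosen here for illustration. It is not one of the models Suarez analyses, and the correspondence drawn in the comments is an illustrative reading rather than his own. The per-contact transmission probability `p` plays the role of a single-case probability (the pathogen's transmissibility), and the counts of susceptible, infected and removed people supply the frequency ratios. The model treats every individual as identical, so it does not represent differences in the dispositional properties of organisms. The function name and all parameter values are hypothetical.

```python
import random


def reed_frost(s0: int, i0: int, p: float, generations: int, seed: int = 0) -> list[tuple[int, int, int]]:
    """Simulate a Reed-Frost chain-binomial epidemic.

    s0, i0: initial numbers of susceptible and infected individuals.
    p: probability that one infective transmits to one given susceptible in a generation
       (read here, illustratively, as a single-case probability reflecting transmissibility).
    Returns the (susceptible, infected, removed) counts for each generation.
    """
    rng = random.Random(seed)
    s, i, r = s0, i0, 0
    history = [(s, i, r)]
    for _ in range(generations):
        if i == 0:
            break  # the epidemic has died out
        # A susceptible escapes infection only if it escapes every one of the i infectives.
        q = 1 - (1 - p) ** i
        new_infections = sum(1 for _ in range(s) if rng.random() < q)
        # Current infectives are removed; the newly infected become the next generation's infectives.
        s, i, r = s - new_infections, new_infections, r + i
        history.append((s, i, r))
    return history


if __name__ == "__main__":
    n = 1000
    for s, i, r in reed_frost(s0=n - 5, i0=5, p=0.002, generations=30, seed=1):
        # Frequency ratios of susceptible, infected and removed people in the population.
        print(f"susceptible {s / n:.3f}  infected {i / n:.3f}  removed {r / n:.3f}")
```

The design keeps the single-case layer (one parameter, `p`) separate from the frequency layer (population proportions that emerge from many such chances), which is the kind of separation the paragraph above describes.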

Suarez’s work can be understood as demonstrating that the modern period has failed to provide a unifying framework for probability. Suarez takes this as reinforcing Hacking’s work, inasmuch as we must take the duality seriously. He responds by advocating an even stronger pluralism at the objective level, centring the importance of statistical practice in future philosophical work.

Analysis and Conclusion

Suarez, as a philosopher, is largely concerned with relating the theory of probability to practice. Those of us who practise statistics may instead be concerned with an inverse question of sorts. As applied statisticians we are interested in helping clients achieve their goals under uncertainty or variation. In this sense we are interested in making inferences or predictions given some context or data. These inferences can be seen as judgements that license certain decisions or viewpoints. We hope that the techniques we employ establish judgements that meet the client's needs, and that suitably license or rule out the decisions they want to make or the viewpoints they want to hold. To have a fighting chance at this, we need to understand which philosophical and mathematical assumptions a given technique employs, and what philosophical and analytic content our conclusions carry. Modern statistical practice, as far as I understand it, does not achieve this. We as statisticians often lack the tools and skills to critically examine and understand our work in a wider context. Techniques are used because they are the ones we are most familiar with, or simply because they have been used in the past.

I believe that the pluralism represented by Suarez and Hacking provides a space in which statisticians can explore and meet the many different needs required of them. This plurality of needs reflects both the differing needs of clients and the multitude of techniques available in statistical practice. Some clients may want to establish a causal hypothesis beyond all doubt; others may want to assess the likelihood of a one-shot event, to evaluate how probable a given outcome truly is, or to explore a set of claims. In her 2018 book Statistical Inference as Severe Testing, Deborah Mayo outlines a normative view of statistical inference that aims to severely test a claim by exposing it to a trial that is highly capable of finding flaws in it. Mayo is right that some clients may wish to severely test a claim using statistics; however, as she herself notes, “[she’s] not saying that statistical inference is always about formal statistical testing”. Clients have a plurality of needs, which may require a plurality of techniques, each with a potentially differing philosophy.

Another practical aspect of pluralism involves taking to heart the lessons of statistics itself. In statistics, we tackle fallibilism and uncertainty head on by enumerating possibilities, quantifying their likelihood, and understanding their consequences. Not to apply this approach to meta-questions within our own field would be to forgo one of the greatest insights statistics has to offer us in approaching the complexities of the world around us.

A word of caution is needed here: it is not my intention to grant carte blanche to employ whatever statistical technique one wishes without justification. To properly take advantage of pluralism as a stance we must be committed to analysing and understanding the consequences of our work. It may turn out that certain techniques do not provide the judgements our clients typically want, but we must do the work to discover this first. To summarise, agnosticism or pluralism need not be born of a lack of intellectual commitment; it can instead stem from a genuine belief that the two notions cannot be separated, since the historical literature gives us pause as to whether we will ever be able to reduce one concept to the other. Furthermore, pluralism offers a stance from which to explore the consequences and implicit commitments we make when modelling. This will enable us to meet our clients' demands in a more sophisticated and sincere way. It requires us as statisticians to be somewhat acquainted with the philosophical literature, and to think philosophically about the mathematics we employ when modelling the world.

Both Hacking’s and Suarez’s books are worth reading for anybody interested in probability theory, as I have barely scratched the surface of their work. The Emergence of Probability contains fascinating historical titbits about the earliest dice and the mathematical mistakes of Leibniz. Suarez offers an insightful analysis of the different types of scientific models and a well-thought-out history of modern interpretations. Together they represent the forefront of pragmatic philosophies of statistics, upon which foundations can be built.