A post by Shannon Williams, PhD student on the Compass programme.

My PhD focuses on the application of statistical methods to volcanic hazard forecasting. This research is jointly supervised by Professor Jeremy Philips (School of Earth Sciences) and Professor Anthony Lee.

Introduction to probabilistic volcanic hazard forecasting

When an explosive volcanic eruption happens, large amounts of volcanic ash can be injected into the atmosphere and remain airborne for many hours to days from the onset of the eruption. Driven by atmospheric wind, this ash can travel up to thousands of kilometres downwind of the volcano and cause significant impacts on agriculture, infrastructure, and human health. Notably, a series of explosive eruptions from Eyjafjallajökull, Iceland between March and June 2010 resulted in the highest level of air travel disruption in Northern Europe since the Second World War, with 108,000 flights cancelled and 1.7 billion USD in revenue lost.

The challenging task of forecasting volcanic ash dispersion is complicated by the chaotic nature of atmospheric wind fields and the lack of direct observations of ash concentration obtained during eruptive events.

Ensemble modelling

To construct probabilistic volcanic ash hazard assessments, volcanologists typically opt for an ensemble modelling approach that filters out the uncertainty in the meteorological conditions. This approach requires a model for the simulation of atmospheric ash dispersion, given eruption source conditions and meteorological inputs (referred to as forcing data). Typically a deterministic simulator such as FALL3D [1] is used, and so the uncertainty in the output is a direct consequence of the uncertainty in the inputs. To capture the uncertainty in the meteorological data, volcanologists construct an ensemble of simulations in which the eruption conditions remain constant but the forcing data is altered, and average over the ensemble to produce an ash dispersion forecast [2].

Whilst this is a statistical approach by construction and the objective is to provide probabilistic forecasts, predictions are often stated without a measure of their uncertainty. Furthermore, the ensemble size is typically decided on a pragmatic basis, without consideration of the magnitude of the probabilities of interest or the desired confidence in these probabilities. Much of my research since the beginning of my PhD has been on ways to quantify these probabilities and their uncertainty, and on methods of deciding the ensemble size and reducing the uncertainty (variance) of the probability estimates.

Ash concentration exceedance probabilities

A common exercise in volcanic ash hazard assessment is the computation of ash concentration exceedance probabilities [3]. Given an eruption scenario, which we provide to the model by defining inputs such as the volcanic plume height and the mass eruption rate, what is the probability that the ash concentration at some location exceeds a pre-defined threshold? For example, how likely is it that the ash concentration at some flight level near Heathrow airport exceeds 500 for 24 hours or more? This is the sort of question that aviation authorities seek answers to, in order to ensure that flights can take off safely in the event of an eruption similar to that in 2010.

Exceedance probability estimation

To construct an ensemble for exceedance probability estimation, we draw $n$ inputs $Z_1, \dots, Z_n$ from a dataset of historical meteorological data. For each $Z_i$, the output of the simulator is obtained by applying some function $g$ to $Z_i$. Then, for the location of interest, the maximum ash concentration that persists for more than 24 hours is $X_i = h(g(Z_i))$, the result of applying some other function $h$ to $g(Z_i)$. For a threshold $c$, we represent the event of exceedance by

$$Y_i = \mathbb{1}\{X_i > c\},$$

where $\mathbb{1}$ denotes the indicator function. $Y_i$ takes value 1 if the threshold is exceeded, and 0 otherwise. $Y_i$ is a Bernoulli random variable which depends implicitly on $g$, $h$ and $c$, with success parameter

$$p = \mathbb{P}(X_i > c),$$

representing the exceedance probability. As the $Y_i$ are independent and identically distributed random variables with common mean $p$ and variance $p(1-p)$, we estimate $p$ via the sample mean of $Y_1, \dots, Y_n$,

$$\hat{p}_n = \frac{1}{n} \sum_{i=1}^{n} Y_i,$$

which provides an unbiased estimate of $p$ with variance

$$\mathrm{Var}(\hat{p}_n) = \frac{p(1-p)}{n}.$$
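As a rough illustration, the estimator above amounts to counting the fraction of ensemble members whose maximum persistent concentration exceeds the threshold. The sketch below assumes the simulator outputs have already been reduced to one number per ensemble member. The log-normal draws are a hypothetical stand-in for real simulator output, and the function name is illustrative only.

```python
import random


def estimate_exceedance_probability(max_concentrations, threshold):
    """Sample mean of the exceedance indicators (1 if above threshold, else 0)."""
    indicators = [1 if x > threshold else 0 for x in max_concentrations]
    return sum(indicators) / len(indicators)


# Hypothetical stand-in for the ensemble: one maximum persistent ash
# concentration per ensemble member at the location of interest.
rng = random.Random(0)
toy_concentrations = [rng.lognormvariate(5.0, 1.0) for _ in range(1000)]

p_hat = estimate_exceedance_probability(toy_concentrations, threshold=500.0)
print(f"estimated exceedance probability: {p_hat:.3f}")
```

In a real assessment each entry of the list would come from one run of the dispersion simulator with a different set of forcing data.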

Quantifying the uncertainty

This variance can be estimated by replacing $p$ with $\hat{p}_n$ and used to obtain confidence intervals for the value of $p$. Since the variance is inversely proportional to $n$, increasing the ensemble size will naturally reduce the variance and decrease the width of this confidence interval. For example, an approximate 95% confidence interval for $p$ would be

$$\hat{p}_n \pm 1.96 \sqrt{\frac{\hat{p}_n (1 - \hat{p}_n)}{n}}.$$
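A minimal sketch of this normal-approximation interval is given below, to show how the width shrinks as the ensemble grows. The estimate of 0.2 is a made-up value for illustration, and clipping the interval to [0, 1] is an added convenience rather than part of the formula.

```python
import math


def approx_confidence_interval(p_hat, n, z=1.96):
    """Normal-approximation interval for an exceedance probability.

    p_hat is the sample proportion of exceedances and n the ensemble size.
    The result is clipped to [0, 1] (an assumption made here for tidiness).
    """
    half_width = z * math.sqrt(p_hat * (1.0 - p_hat) / n)
    return max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)


# Hypothetical estimate of 0.2, compared for two ensemble sizes.
for n in (50, 100):
    lower, upper = approx_confidence_interval(0.2, n)
    print(f"n={n}: [{lower:.3f}, {upper:.3f}]")
```

Doubling the ensemble size narrows the interval by a factor of about 1.4, since the half-width scales with the inverse square root of the ensemble size.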

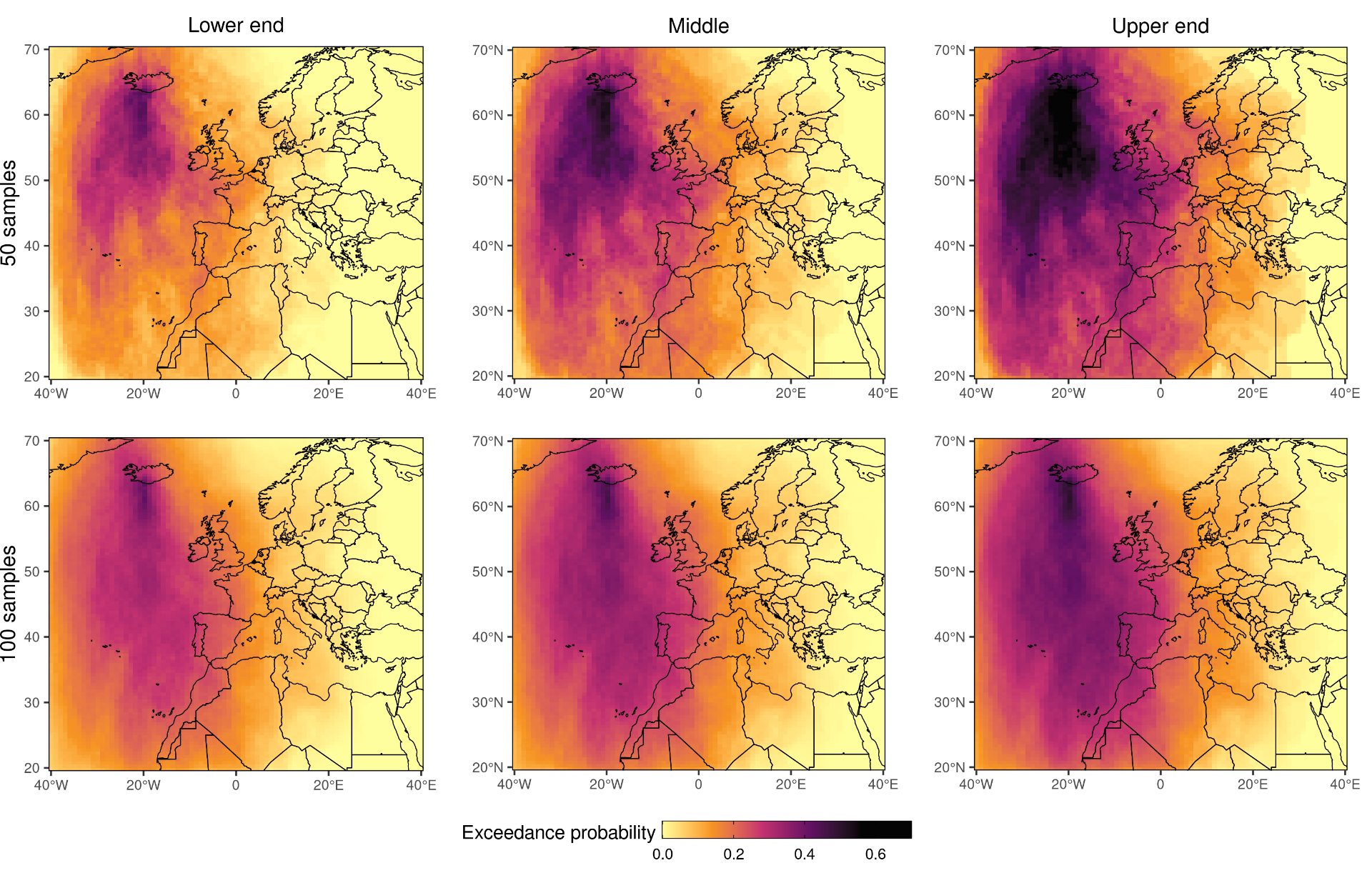

The figure above compares 95% confidence intervals for the probability of exceeding 500 for 24 hours or more given a VEI 4 eruption from Iceland, for ensemble sizes of 50 and 100. The middle maps are the exceedance probability estimates, with the lower and upper ends of the confidence interval on the left and right, respectively.

Commonly we would want to visualise estimates of probabilities of different magnitudes, corresponding to different thresholds, alongside each other. In this situation it is beneficial to view them on a logarithmic scale and provide confidence intervals for $\log p$. Given $\hat{p}_n > 0$, a 95% confidence interval for $\log p$ is

$$\log \hat{p}_n \pm 1.96 \sqrt{\frac{1 - \hat{p}_n}{n \hat{p}_n}}.$$
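The log-scale interval can be sketched in the same way. The code below assumes the interval has the delta-method form, with half-width 1.96 times the square root of (1 - estimate) / (n * estimate), and it requires at least one observed exceedance so that the logarithm is defined. The input values are hypothetical.

```python
import math


def log_scale_confidence_interval(p_hat, n, z=1.96):
    """Approximate interval for log(p), assuming the delta-method form."""
    if p_hat <= 0.0:
        raise ValueError("need at least one observed exceedance to take the log")
    half_width = z * math.sqrt((1.0 - p_hat) / (n * p_hat))
    log_p_hat = math.log(p_hat)
    return log_p_hat - half_width, log_p_hat + half_width


# Hypothetical estimate of 0.01 from an ensemble of 6000 members.
log_lower, log_upper = log_scale_confidence_interval(0.01, 6000)

# Exponentiating gives an interval for p itself that stays positive.
print(math.exp(log_lower), math.exp(log_upper))
```

One practical advantage of working on the log scale is that the back-transformed lower bound can never fall below zero.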

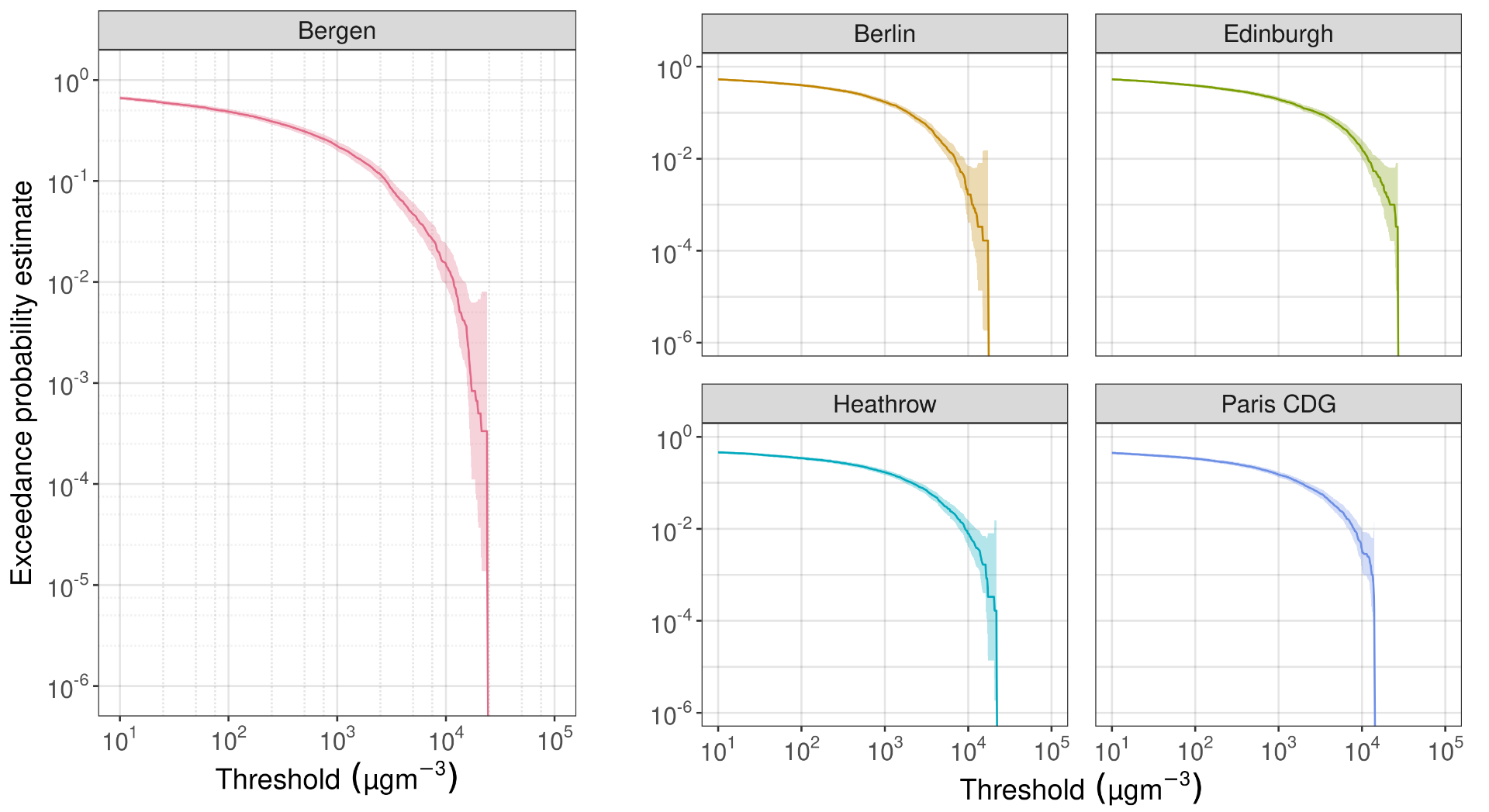

The figure above illustrates exceedance probability estimates with 95% confidence intervals versus threshold for a number of locations in Europe on a double logarithmic scale, based on an ensemble size of 6000.

Choosing the ensemble size

Clearly, as the ash concentration threshold increases the probability of exceeding that threshold gets smaller. Fewer exceedance events will be seen in the ensemble than for lower thresholds, and the size of the confidence interval will be larger when compared with the magnitude of the probability itself.

It seems sensible to set the ensemble size based on the magnitude of the lowest probability of interest, and a natural way to do this is to consider the expected number of exceedances given $p$:

$$\mathbb{E}\left[\sum_{i=1}^{n} Y_i\right] = np.$$

Then, if we want to observe $m$ or more exceedances, we should set $n \geq m/p$. If the lowest probability of interest is $p_{\min}$, we need $n \geq 1/p_{\min}$ to expect at least one exceedance event.
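The rule above is simple enough to compute directly. In this sketch the function name and the example probabilities are hypothetical. The small rounding step is an added guard against floating-point error pushing an exact ratio just over an integer.

```python
import math


def ensemble_size_for_expected_exceedances(p_min, m=1):
    """Smallest n such that the expected number of exceedances n * p_min is at least m."""
    # Round first so that, e.g., 3 / 0.1 = 30.000000000000004 is not bumped up to 31.
    return math.ceil(round(m / p_min, 9))


# Hypothetical lowest probabilities of interest.
for p_min in (0.01, 0.001):
    n_one = ensemble_size_for_expected_exceedances(p_min)
    n_five = ensemble_size_for_expected_exceedances(p_min, m=5)
    print(f"p_min={p_min}: n >= {n_one} for one exceedance, n >= {n_five} for five")
```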

However, this approach does not guarantee that estimates of low-probability events will have low variances. Noting that the variance will be small for small $p$ but large compared to $p^2$ if $np$ is small, we instead consider the relative variance,

$$\frac{\mathrm{Var}(\hat{p}_n)}{p^2} = \frac{1 - p}{np},$$

which is the variance of the ratio of $\hat{p}_n$ and $p$. Choosing $n$ such that the relative variance is bounded above by 1 for the lowest value of $p$ that is of interest thus ensures that the expected squared deviation of the estimate from its mean is no greater than the squared probability itself. So, for some lowest probability of interest $p_{\min}$, we would set

$$\frac{1 - p_{\min}}{n p_{\min}} \leq 1$$

to obtain a minimum sample size of

$$n \geq \frac{1 - p_{\min}}{p_{\min}}.$$

Furthermore, since $\frac{1 - p}{np}$, with $p$ replaced by its estimate $\hat{p}_n$, is exactly the quantity in the square-root term of the confidence interval for $\log p$, this approach has the additional benefit of directly reducing the width of the confidence interval for $\log p$.
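The relative-variance criterion can be sketched as follows. The text bounds the relative variance by 1; the `bound` parameter here is a hypothetical generalisation added for illustration, as are the function names and the example probability.

```python
import math


def relative_variance(p, n):
    """Relative variance of the sample-mean estimator: (1 - p) / (n * p)."""
    return (1.0 - p) / (n * p)


def ensemble_size_for_relative_variance(p_min, bound=1.0):
    """Smallest n with relative variance at most `bound` at probability p_min."""
    # Round first to guard against floating-point error in an exact ratio.
    return math.ceil(round((1.0 - p_min) / (bound * p_min), 9))


# Hypothetical lowest probability of interest.
p_min = 0.01
n_min = ensemble_size_for_relative_variance(p_min)
print(n_min, relative_variance(p_min, n_min))

# A tighter bound of 0.1 needs roughly ten times as many ensemble members.
print(ensemble_size_for_relative_variance(p_min, bound=0.1))
```

With a bound of 1 this gives nearly the same ensemble size as requiring one expected exceedance, so tighter bounds are what distinguish the two criteria in practice.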

Further work

Further work I have carried out on this topic so far has been an investigation into alternative sampling schemes for reducing the computational cost of constructing an ensemble, as well as variance reduction methods.

References

[1] Folch, A., Costa, A., and Macedonio, G. FALL3D: A computational model for transport and deposition of volcanic ash. In: Computers and Geosciences 35.6, 2009, pp. 1334-1342.

[2] Bonadonna, C. et al. Probabilistic modeling of tephra dispersal: Hazard assessment of a multiphase rhyolitic eruption at Tarawera, New Zealand. In: Journal of Geophysical Research: Solid Earth 110.B3, 2005.

[3] Jenkins, S. et al. Regional ash fall hazard I: a probabilistic assessment methodology. In: Bulletin of Volcanology 74.7, 2012, pp. 1699-1712.