A post by Josh Givens, PhD student on the Compass programme.

Density ratio estimation is a highly useful field of mathematics with many applications. This post describes my research undertaken alongside my supervisors Song Liu and Henry Reeve which aims to make density ratio estimation robust to missing data. This work was recently published in proceedings for AISTATS 2023.

Density Ratio Estimation

Definition

As the name suggests, density ratio estimation is simply the task of estimating the ratio between two probability densities. More precisely for two RVs (Random Variables) on some space

with probability density functions (PDFs)

respectively, the density ratio is the function

defined by

.

Density ratio estimation (DRE) is then the practice of using IID (independent and identically distributed) samples from and

to estimate

. What makes DRE so useful is that it gives us a way to characterise the difference between these 2 classes of data using just 1 quantity,

.

The Density Ratio in Classification

We now give demonstrate this characterisability in the case of classification. To frame this as a classification problem define and

by

. The task of predicting

given

using some function

is then our standard classification problem. In classification a common target is the Bayes Optimal Classifier, the classifier

which maximises

We can write this classifier in terms of

as we know that

where

is the indicator function. Then, by the total law of probability, we have

Hence to learn the Bayes optimal classifier it is sufficient to learn the density ratio and a constant. This pattern extends well beyond Bayes optimal classification to many other areas such as error controlled classification, GANs, importance sampling, covariate shift, and others. Generally speaking, if you are in any situation where you need to characterise the difference between two classes of data, it’s likely that the density ratio will make an appearance.

Estimation Implementation – KLIEP

Now we have properly introduced and motivated DRE, we need to look at how we can go about performing it. We will focus on one popular method called KLIEP here but there are a many different methods out there (see Sugiyama et al 2012 for some additional examples.)

The intuition behind KLIEP is simple: as , if

is “close” to

then

is a good estimate of

. To measure this notion of closeness KLIEP uses the KL (Kullback-Liebler) divergence which measures the distance between 2 probability distributions. We can now formulate our ideal KLIEP objective as follows:

where represent the KL divergence from

to

. The constraint ensures that the right hand side of our KL divergence is indeed a PDF. From the definition of the KL-divergence we can rewrite the solution to this as

where

is the solution to the unconstrained optimisation

As this is now just an unconstrained optimisation over expectations of known transformations of , we can approximate this using samples. Given samples

from

and samples

from

our estimate of the density ratio will be

where

solves

Despite KLIEP being commonly used, up until now it has not been made robust to missing not at random data. This is what our research aims to do.

Missing Data

Suppose that instead of observing samples from , we observe samples from some corrupted version of

,

. We assume that

so that either

is missing or

takes the value of

. We also assume that whether

is missing depends upon the value of

. Specifically we assume

with

not constant and refer to

as the missingness function. This type of missingness is known as missing not at random (MNAR) and when dealt with improperly can lead to biased result. Some examples of MNAR data could be readings take from a medical instrument which is more likely to err when attempting to read extreme values or recording responses to a questionnaire where respondents may be more likely to not answer if the deem their response to be unfavourable. Note that while we do not see what the true response would be, we do at least get a response meaning that we know when an observation is missing.

Missing Data with DRE

We now go back to density ratio estimation in the case where instead of observing samples from we observe samples from their corrupted versions

. We take their respective missingness functions to be

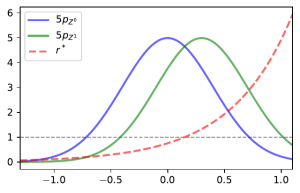

and assume them to be known. Now let us look at what would happen if we implemented KLIEP with the data naively by simply filtering out the missing-values. In this case, the actual density ratio we would be estimating would be

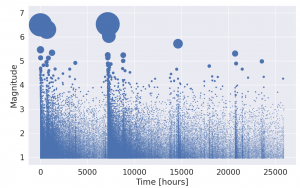

and so we would get inaccurate estimates of the density ratio no matter how many samples are used to estimate it. The image below demonstrates this in the case were samples in class are more likely to be missing when larger and class

has no missingness.

Our Solution

Our solution to this problem is to use importance weighting. Using relationships between the densities of and

we have that

As such we can re-write the KLIEP objective to keep our expectation estimation unbiased even when using these corrupted samples. This gives our modified objective which we call M-KLIEP as follows. Given samples from

and samples

from

our estimate is

where

solves

This objective will now target even when used on MNAR data.

Application to Classification

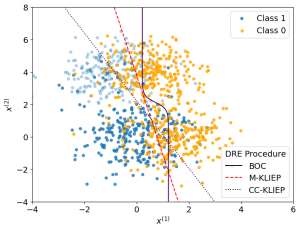

We now apply our density ratio estimation on MNAR data to estimate the Bayes optimal classifier. Below shows a plot of samples alongside the true Bayes optimal classifier and estimated classifiers from the samples via our method M-KLIEP and a naive method CC-KLIEP which simply ignores missing points. Missing data points are faded out.

As we can see, due to not accounting for the MNAR nature of the data, CC-KLIEP underestimates the true number of class 1 samples in the top left region and therefore produces a worse classifier than our approach.

Additional Contributions

As well as this modified objective our paper provides the following additional contributions:

- Theoretical finite sample bounds on the accuracy of our modified procedure.

- Methods for learning the missingness functions

.

- Expansions to partial missingness via a Naive-Bayes framework.

- Downstream implementation of our method within Neyman-Pearson classification.

- Adaptations to Neyman-Pearson classification itself making it robust to MNAR data.

For more details see our paper and corresponding github repository. If you have any questions on this work feel free to contact me at josh.givens@bristol.ac.uk.